Understanding Tokenization in Large Language Models

Unlocking the Secrets of AI Language: A Dive into the World of Tokens.

Understanding Tokenization in Large Language Models

In the rapidly evolving landscape of artificial intelligence, the concept of tokenization plays a pivotal role, especially when it comes to understanding and generating human language.

As we delve into the intricacies of large language models (LLMs) like OpenAI's GPT series, it becomes essential to grasp what tokens are, how they are created, and their significance in the realm of natural language processing (NLP).

What is Tokenization?

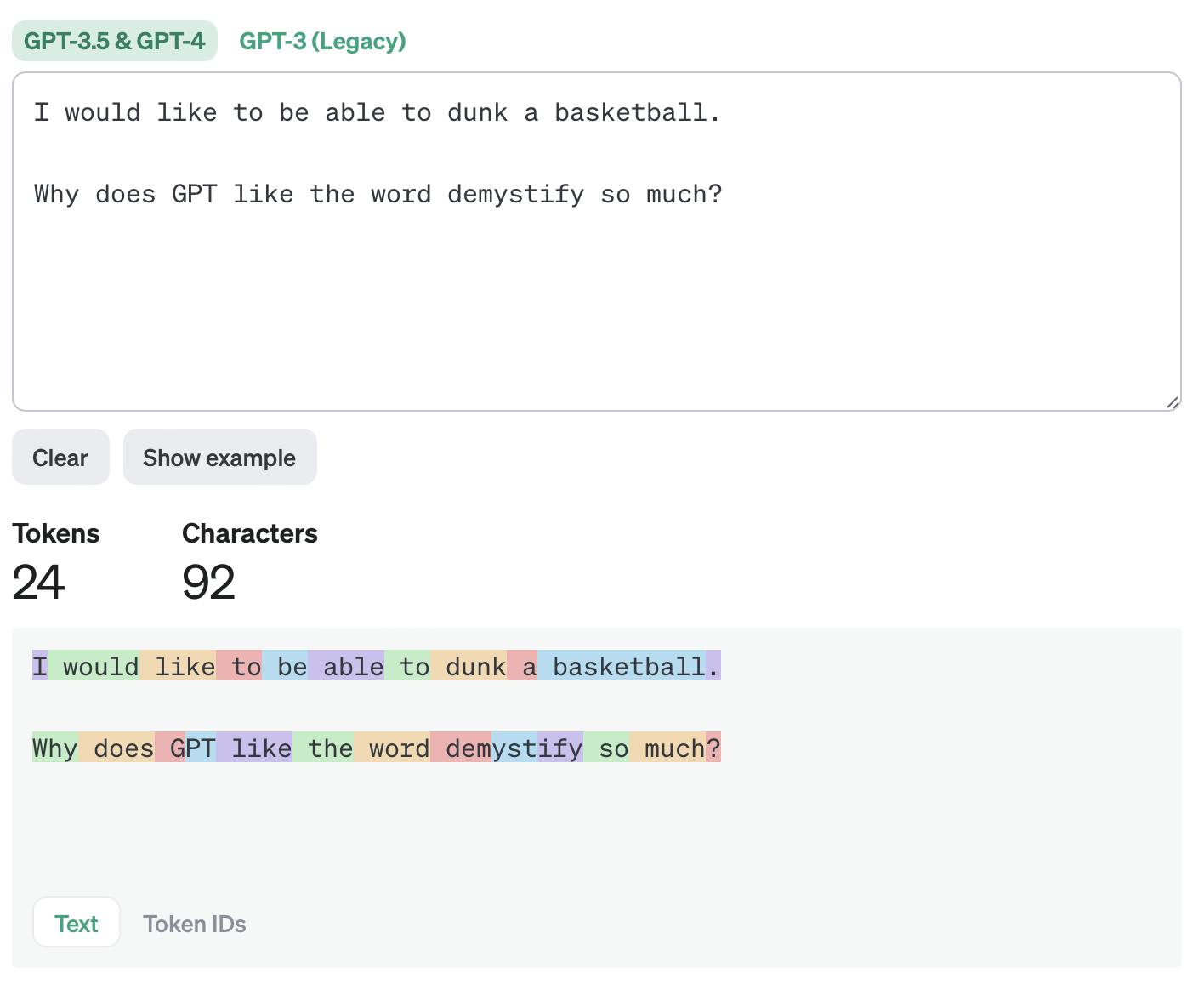

At its core, tokenization is the process of breaking down text into smaller pieces, known as tokens. These tokens can be words, parts of words, or even punctuation marks. However, tokenization in the context of LLMs is not as straightforward as splitting text at spaces or punctuation. Tokens can include trailing spaces, sub-words, or even multiple words, depending on the language and the specific implementation of the tokenizer.

Tokens: The Building Blocks of Language Understanding

Tokens serve as the fundamental building blocks that allow LLMs to process and understand text. By converting text into a sequence of tokens, these models can analyze and generate language in a structured manner. This token-based approach enables the models to capture the nuances of language, including grammar, syntax, and semantics.

How Does Tokenization Work?

The process of tokenization varies between models, but many LLMs, including the latest versions like GPT-3.5 and GPT-4, utilize a modified version of byte-pair encoding (BPE). This method starts with the most basic elements—individual characters—and progressively merges the most frequently occurring adjacent characters or sequences into single tokens. This approach allows the model to efficiently handle a vast range of language phenomena, from common words to rare terms, idioms, and even emojis.

The Role of Tokens in Language Models

Tokens are not just placeholders for words; they are the lens through which LLMs view the text. Each token is associated with a vector that represents its meaning and context within the language model's training data. When processing or generating text, the model manipulates these vectors to produce coherent, contextually appropriate language.

The Importance of Tokenization

Tokenization is critical for several reasons:

Efficiency: It allows models to process large texts more efficiently by breaking them down into manageable pieces.

Flexibility: Tokenization enables LLMs to handle a wide variety of languages and linguistic phenomena, including morphologically rich languages where the relationship between words and their meanings is complex.

Scalability: By standardizing the input and output of the model into tokens, developers can design systems that scale to different languages and domains without extensive modifications.

Practical Implications

Understanding tokenization and its implications can greatly influence how we interact with and implement LLMs. For instance, the token limit in models like GPT-4 affects how much text can be processed or generated in a single request. This constraint necessitates creative problem-solving, such as condensing prompts or breaking down tasks into smaller chunks.

Moreover, the tokenization process's language dependence highlights the need for careful consideration when deploying LLMs in multilingual contexts. Languages with a higher token-to-character ratio may require more tokens to express the same amount of information, impacting the cost and feasibility of using LLMs for those languages.

How words are split into tokens is also language-dependent. For example ‘Cómo estás’ (‘How are you’ in Spanish) contains 5 tokens (for 10 chars). The higher token-to-char ratio can make it more expensive to implement the API for languages other than English.

- OpenAI

Conclusion

Tokenization is a foundational concept in the world of large language models, underpinning the remarkable capabilities of AI to understand and generate human language. As we continue to explore and expand the boundaries of what AI can achieve, a deep understanding of tokenization will remain crucial for anyone working in the field of artificial intelligence and natural language processing.

Whether you're developing new applications, optimizing existing systems, or simply curious about how AI understands language, the journey begins with tokens.